Mirror of https://github.com/lephisto/pfsense-analytics.git, synced 2026-01-26 12:14:08 +01:00

Docker/docker-compose.yml (Normal file, 123 lines changed)

@@ -0,0 +1,123 @@

```yaml
version: '2'
services:

  # MongoDB: https://hub.docker.com/_/mongo/
  mongodb:
    image: 'mongo:3'
    volumes:
      - 'mongo_data:/data/db'

  # Elasticsearch: https://www.elastic.co/guide/en/elasticsearch/reference/6.x/docker.html
  elasticsearch:
    image: 'docker.elastic.co/elasticsearch/elasticsearch-oss:6.8.4'
    mem_limit: 4g
    restart: always
    volumes:
      - 'es_data:/usr/share/elasticsearch/data'
    env_file:
      - ./elasticsearch.env
    ulimits:
      memlock:
        soft: -1
        hard: -1
    ports:
      - '9200:9200'

  # Graylog: https://hub.docker.com/r/graylog/graylog/
  graylog:
    build:
      context: ./graylog/.
    volumes:
      - 'graylog_journal:/usr/share/graylog/data/journal'
      - './service-names-port-numbers.csv:/etc/graylog/server/service-names-port-numbers.csv'
    env_file:
      - ./graylog.env
    links:
      - 'mongodb:mongo'
      - elasticsearch
    depends_on:
      - mongodb
      - elasticsearch
    ports:
      # Netflow
      - '2055:2055/udp'
      # Syslog Feed
      - '5442:5442/udp'
      # Graylog web interface and REST API
      - '9000:9000'
      # Syslog TCP
      - '1514:1514'
      # Syslog UDP
      - '1514:1514/udp'
      # GELF TCP
      - '12201:12201'
      # GELF UDP
      - '12201:12201/udp'

  # Kibana : https://www.elastic.co/guide/en/kibana/6.8/index.html
  kibana:
    image: 'docker.elastic.co/kibana/kibana-oss:6.8.4'
    env_file:
      - kibana.env
    depends_on:
      - elasticsearch
    ports:
      - '5601:5601'
  cerebro:
    image: lmenezes/cerebro
    ports:
      - '9001:9000'
    links:
      - elasticsearch
    depends_on:
      - elasticsearch
    logging:
      driver: "json-file"
      options:
        max-size: "100M"

  influxdb:
    image: 'influxdb:latest'
    env_file:
      - ./influxdb.env
    ports:
      - '8086:8086'
    volumes:
      - 'influxdb:/var/lib/influxdb'
    logging:
      driver: "json-file"
      options:
        max-size: "100M"

  grafana:
    image: 'grafana/grafana:latest'
    env_file:
      - ./grafana.env
    ports:
      - '3000:3000'
    volumes:
      - 'grafana:/var/lib/grafana'
      - './provisioning/:/etc/grafana/provisioning'
    links:
      - elasticsearch
      - influxdb
    depends_on:
      - elasticsearch
      - influxdb
    logging:
      driver: "json-file"
      options:
        max-size: "100M"

# Volumes for persisting data, see https://docs.docker.com/engine/admin/volumes/volumes/
volumes:
  mongo_data:
    driver: local
  es_data:
    driver: local
  graylog_journal:
    driver: local
  grafana:
    driver: local
  influxdb:
    driver: local
```
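The `ports` entries in this compose file use Compose's short syntax, `HOST:CONTAINER[/PROTOCOL]`, where TCP is assumed when no protocol is given. The Python sketch below splits such entries to make the format explicit. `parse_port` and `PortMapping` are hypothetical helpers for illustration only, and the sketch deliberately ignores forms this file does not use (host IP prefixes, port ranges).

```python
from typing import NamedTuple


class PortMapping(NamedTuple):
    host: int
    container: int
    protocol: str


def parse_port(spec: str) -> PortMapping:
    """Split a short-syntax 'HOST:CONTAINER[/PROTOCOL]' entry (hypothetical helper).

    Only the two-part form used in this compose file is handled; host IP
    prefixes and port ranges are deliberately out of scope.
    """
    mapping, _, protocol = spec.partition("/")
    host, container = mapping.split(":")
    # No explicit protocol means TCP.
    return PortMapping(int(host), int(container), protocol or "tcp")


# Entries copied from the graylog and cerebro services in the compose file.
for spec in ["2055:2055/udp", "9000:9000", "12201:12201/udp", "9001:9000"]:
    print(f"{spec:>16} -> {parse_port(spec)}")
```

The `9001:9000` entry shows why the two sides can differ: cerebro listens on port 9000 inside its container but is published on host port 9001, which leaves host port 9000 free for Graylog's web interface.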

Docker/elasticsearch.env (Normal file, 5 lines changed)

@@ -0,0 +1,5 @@

```
http.host=0.0.0.0
transport.host=0.0.0.0
network.host=0.0.0.0
ES_JAVA_OPTS=-Xms1g -Xmx1g
ES_HEAP_SIZE=2g
```
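Each `*.env` file is read line by line as `KEY=VALUE` and is not evaluated by a shell, so quoting that was harmless in the YAML `environment:` list of the old compose file does not carry over unchanged. The sketch below assumes a naive parser that splits each line on the first `=` with no quote handling (an assumption for illustration; real docker-compose releases differ in how they treat quotes). It shows how wrapping a whole line in double quotes can leak a `"` into the variable name. `parse_env_lines` is a hypothetical helper.

```python
def parse_env_lines(lines):
    """Naively parse KEY=VALUE lines, skipping blank lines and '#' comments.

    Assumption: split on the first '=' with no quote handling, which is a
    simplification of what docker-compose actually does.
    """
    env = {}
    for raw in lines:
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        env[key] = value if sep else None
    return env


good = parse_env_lines(["ES_JAVA_OPTS=-Xms1g -Xmx1g", "http.host=0.0.0.0"])
bad = parse_env_lines(['"ES_JAVA_OPTS=-Xms1g -Xmx1g"'])
print(good)  # {'ES_JAVA_OPTS': '-Xms1g -Xmx1g', 'http.host': '0.0.0.0'}
print(bad)   # {'"ES_JAVA_OPTS': '-Xms1g -Xmx1g"'}
```

In the quoted case, Elasticsearch would never see a variable named `ES_JAVA_OPTS`, so its heap settings would silently fall back to the defaults.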

Docker/grafana.env (Normal file, 1 line changed)

@@ -0,0 +1 @@

```
GF_INSTALL_PLUGINS=grafana-piechart-panel,grafana-worldmap-panel,savantly-heatmap-panel
```

Docker/graylog.env (Normal file, 7 lines changed)

@@ -0,0 +1,7 @@

```
# CHANGE ME (must be at least 16 characters)!
GRAYLOG_PASSWORD_SECRET=somepasswordpepperzzz
# Password: admin
GRAYLOG_ROOT_PASSWORD_SHA2=8c6976e5b5410415bde908bd4dee15dfb167a9c873fc4bb8a81f6f2ab448a918
GRAYLOG_HTTP_EXTERNAL_URI=http://localhost:9000/
# TZ List - https://en.wikipedia.org/wiki/List_of_tz_database_time_zones
GRAYLOG_TIMEZONE=Europe/Berlin
```
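`GRAYLOG_ROOT_PASSWORD_SHA2` holds the hex-encoded SHA-256 digest of the admin password (the value shipped in `graylog.env` is the digest of `admin`, as its comment says), and `GRAYLOG_PASSWORD_SECRET` must be at least 16 characters long. A minimal Python sketch for producing replacement values; the 64-character default length and the use of `secrets.token_urlsafe` are assumptions of this sketch, not requirements stated by Graylog.

```python
import hashlib
import secrets


def root_password_sha2(password: str) -> str:
    """Hex SHA-256 digest of the admin password, for GRAYLOG_ROOT_PASSWORD_SHA2."""
    return hashlib.sha256(password.encode("utf-8")).hexdigest()


def password_secret(length: int = 64) -> str:
    """Random value for GRAYLOG_PASSWORD_SECRET (Graylog needs >= 16 characters)."""
    if length < 16:
        raise ValueError("GRAYLOG_PASSWORD_SECRET must be at least 16 characters")
    # token_urlsafe(n) returns roughly 1.3 * n characters, so trim to the requested length.
    return secrets.token_urlsafe(length)[:length]


print(f"GRAYLOG_PASSWORD_SECRET={password_secret()}")
print(f"GRAYLOG_ROOT_PASSWORD_SHA2={root_password_sha2('change-me')}")
```

Replace `'change-me'` with the password you actually want, then paste both printed lines into `./Docker/graylog.env`.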

Docker/graylog/Dockerfile (Normal file, 8 lines changed)

@@ -0,0 +1,8 @@

```dockerfile
FROM graylog/graylog:3.1
# Probably a bad idea, but it works for now
USER root
ENV GRAYLOG_PLUGIN_DIR=/etc/graylog/server/
RUN mkdir -pv /etc/graylog/server/
COPY ./getGeo.sh /etc/graylog/server/
RUN chmod +x /etc/graylog/server/getGeo.sh && /etc/graylog/server/getGeo.sh
USER graylog
```

Docker/graylog/getGeo.sh (Normal file, 2 lines changed)

@@ -0,0 +1,2 @@

```sh
curl --output ${GRAYLOG_PLUGIN_DIR}/mm.tar.gz https://geolite.maxmind.com/download/geoip/database/GeoLite2-City.tar.gz
tar zxvf ${GRAYLOG_PLUGIN_DIR}/mm.tar.gz -C ${GRAYLOG_PLUGIN_DIR} --strip-components=1
```
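`getGeo.sh` runs at image build time: it downloads the GeoLite2-City tarball and unpacks it with `--strip-components=1`, which drops the dated top-level directory (such as the `GeoLite2-City_20191105/` mentioned in the old README) so that `GeoLite2-City.mmdb` lands directly in `/etc/graylog/server/`. The Python sketch below reproduces only that extraction step on an archive already on disk. The download is left out because MaxMind has since moved GeoLite2 downloads behind a free account and license key, so the URL in the script may no longer work. `extract_stripped` and `demo` are hypothetical helpers, and the demo archive is fake data shaped like the real tarball.

```python
import io
import os
import tarfile
import tempfile
from pathlib import Path


def extract_stripped(archive: str, dest: str, strip: int = 1) -> list:
    """Extract a .tar.gz like `tar zxf ARCHIVE -C DEST --strip-components=STRIP`.

    Only regular files and directories are handled in this sketch; members with
    nothing left after stripping, or with '..' in their path, are skipped.
    """
    written = []
    dest_path = Path(dest)
    with tarfile.open(archive, "r:gz") as tar:
        for member in tar.getmembers():
            parts = Path(member.name).parts[strip:]
            if not parts or ".." in parts:
                continue
            target = dest_path.joinpath(*parts)
            if member.isdir():
                target.mkdir(parents=True, exist_ok=True)
            elif member.isfile():
                target.parent.mkdir(parents=True, exist_ok=True)
                with tar.extractfile(member) as src:
                    target.write_bytes(src.read())
                written.append(str(target))
    return written


def demo():
    """Build a tiny archive shaped like the MaxMind tarball and extract it."""
    payload = b"not-a-real-mmdb"
    with tempfile.TemporaryDirectory() as tmp:
        archive = os.path.join(tmp, "mm.tar.gz")
        with tarfile.open(archive, "w:gz") as tar:
            info = tarfile.TarInfo("GeoLite2-City_20191105/GeoLite2-City.mmdb")
            info.size = len(payload)
            tar.addfile(info, io.BytesIO(payload))
        out = os.path.join(tmp, "server")
        names = [os.path.relpath(p, out) for p in extract_stripped(archive, out)]
        with open(os.path.join(out, "GeoLite2-City.mmdb"), "rb") as fh:
            return names, fh.read()


print(demo())  # (['GeoLite2-City.mmdb'], b'not-a-real-mmdb')
```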

Docker/influxdb.env (Normal file, 1 line changed)

@@ -0,0 +1 @@

```
INFLUXDB_DB="ndpi"
```

Docker/kibana.env (Normal file, 1 line changed)

@@ -0,0 +1 @@

```
ELASTICSEARCH_URL=http://elasticsearch:9200
```

38

README.md

38

README.md

@@ -1,4 +1,8 @@

|

|||||||

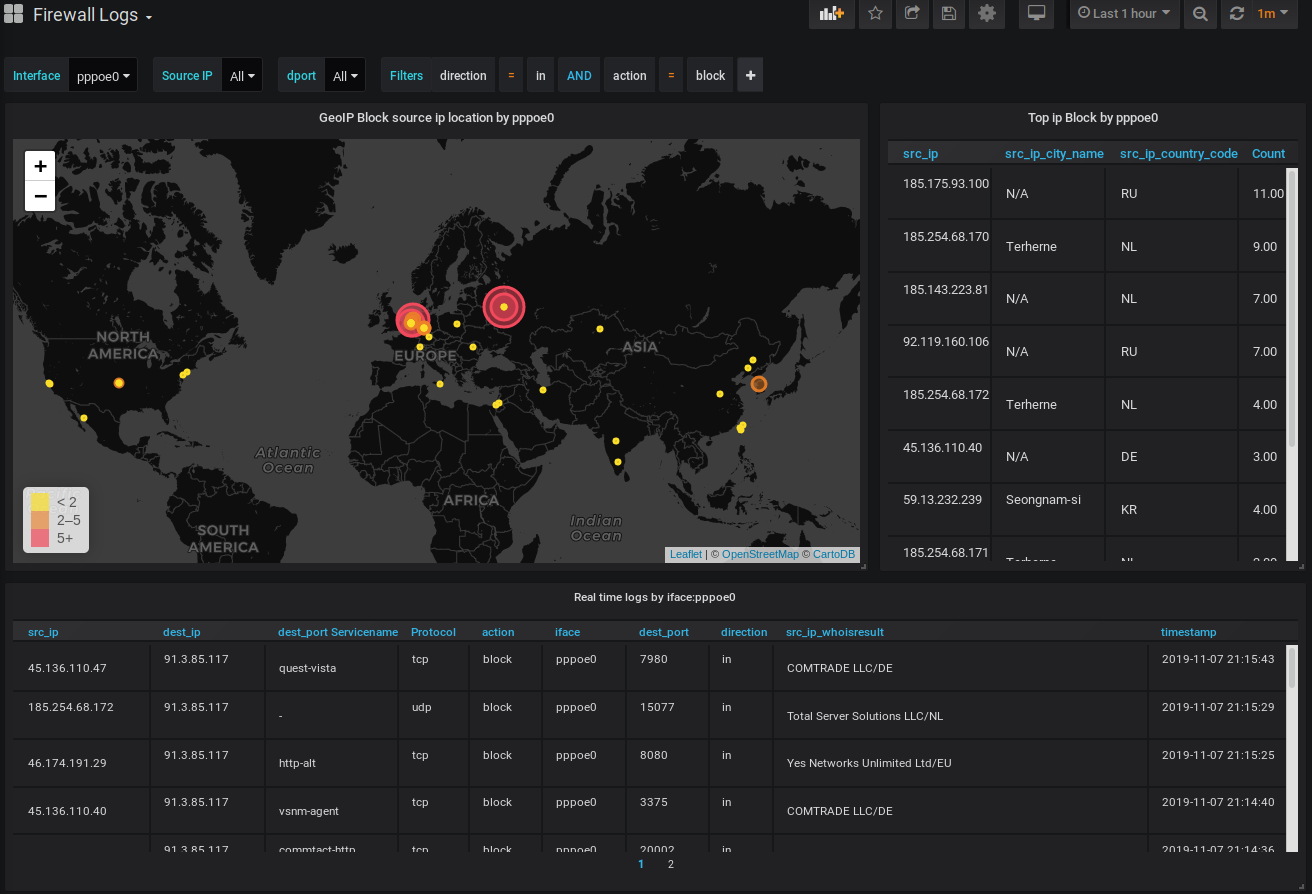

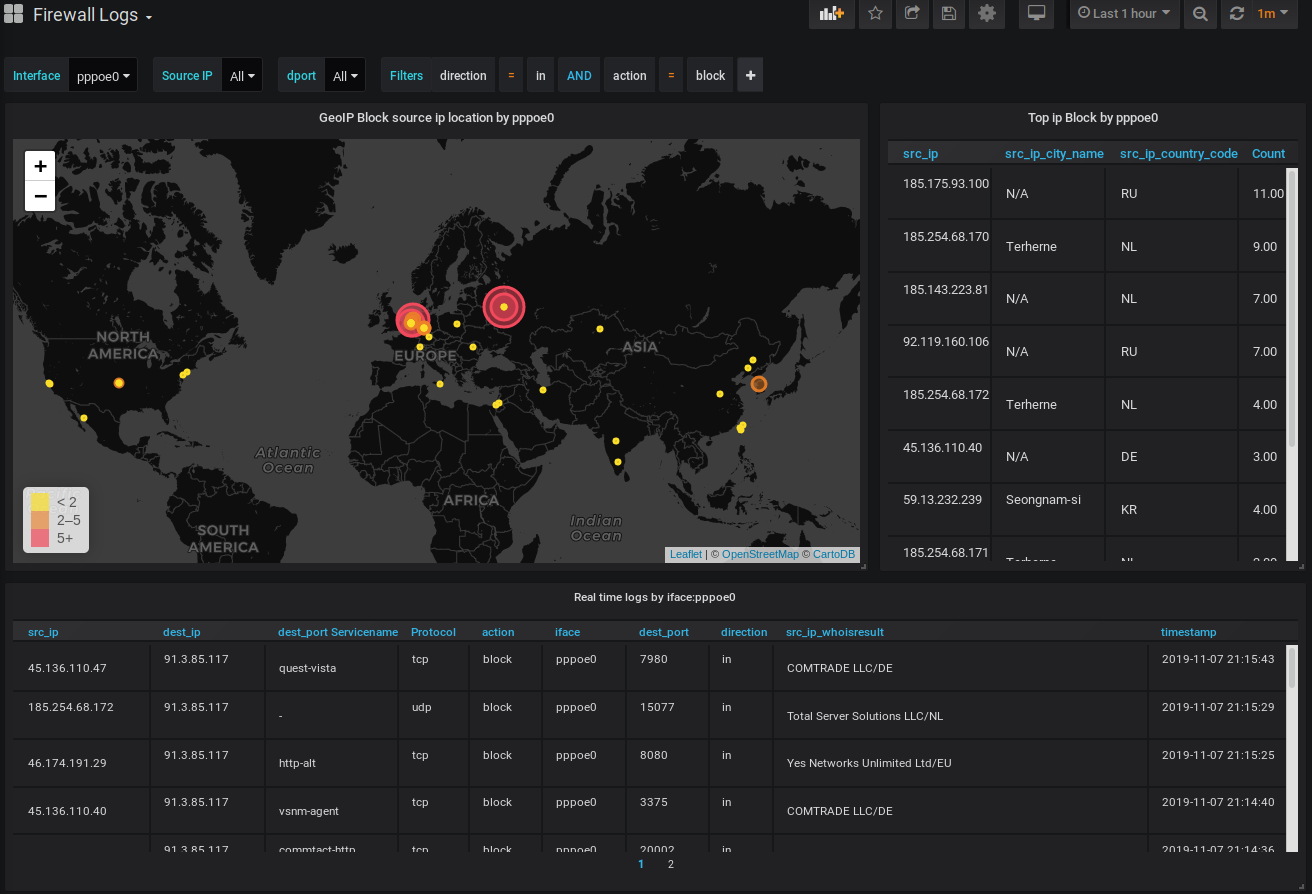

This Project aims to give you better insight of what's going on your pfSense Firewall. It's based on some heavylifting alrerady done by devopstales and opc40772. Since it still was a bit clumsy and outdated I wrapped some docker-compose glue around it, to make it a little bit easier to get up and running. It should work hasslefree with a current Linux that has docker and docker-compose, still there is a number of manual steps required.

|

This is a fork of https://github.com/lephisto/pfsense-analytics

|

||||||

|

|

||||||

|

The original project is really well done but I wanted to organize a few things for clarity and elinimate a few manual steps

|

||||||

|

|

||||||

|

This Project aims to give you better insight of what's going on your pfSense Firewall. It's based on some heavylifting alrerady done by devopstales and opc40772. Since it still was a bit clumsy and outdated I wrapped some docker-compose glue around it, to make it a little bit easier to get up and running. It should work hasslefree with a current Linux that has docker and docker-compose.

|

||||||

|

|

||||||

The whole metric approach is split into several subtopics.

|

The whole metric approach is split into several subtopics.

|

||||||

|

|

||||||

````diff
@@ -23,8 +27,6 @@ Firewall Insights:
 Moar Insights:
 
-
-
 
 This walkthrough has been made with a fresh install of Ubuntu 18.04 Bionic but should work flawless with any debian'ish linux distro.
 
 # 0. System requirements
````

````diff
@@ -42,7 +44,7 @@ sudo apt install docker.io docker-compose git
 Let's pull this repo to the Server where you intend to run the Analytics front- and backend.
 
 ```
-git clone https://github.com/lephisto/pfsense-analytics
+git clone https://github.com/MatthewJSalerno/pfsense-analytics.git
 cd pfsense-analytics
 ```
 
````

````diff
@@ -58,7 +60,10 @@ to make it permanent edit /etc/sysctl.conf and add the line:
 vm.max_map_count=262144
 ```
 
-Next edit the docker-compose.yml file and set some values:
+Next edit the ./Docker/graylog.env file and set some values:
 
+Set the proper Time Zone: https://en.wikipedia.org/wiki/List_of_tz_database_time_zones
+- GRAYLOG_TIMEZONE=Europe/Berlin
+
 The URL you want your graylog to be available under:
 - GRAYLOG_HTTP_EXTERNAL_URI (eg: http://localhost:9000)
````
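Elasticsearch expects the Docker host's `vm.max_map_count` to be at least 262144, which is the value the README sets via `/etc/sysctl.conf`. A minimal Python check, assuming a Linux host where the live value is exposed at `/proc/sys/vm/max_map_count`; `read_max_map_count`, `max_map_count_ok` and `fix_hint` are hypothetical helpers. On many kernels the default is 65530, which is too low.

```python
from pathlib import Path

REQUIRED = 262144  # the value the README asks for in /etc/sysctl.conf


def read_max_map_count(path: str = "/proc/sys/vm/max_map_count") -> int:
    """Read the live vm.max_map_count value (Linux only)."""
    return int(Path(path).read_text().strip())


def max_map_count_ok(current: int, required: int = REQUIRED) -> bool:
    """True if `current` meets Elasticsearch's minimum for vm.max_map_count."""
    return current >= required


def fix_hint(current: int) -> str:
    """Suggest a sysctl command when the value is too low, else return ''."""
    if max_map_count_ok(current):
        return ""
    return (f"vm.max_map_count is {current}; "
            f"run: sudo sysctl -w vm.max_map_count={REQUIRED}")
```

On the Docker host, `print(fix_hint(read_max_map_count()) or "ok")` reports whether the setting took effect; `sysctl -w` changes the running value only, which is why the README also adds the line to `/etc/sysctl.conf`.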

````diff
@@ -67,26 +72,21 @@ A salt for encrypting your graylog passwords
 - GRAYLOG_PASSWORD_SECRET (Change that _now_)
 
 
-Now let's pull the GeoIP Database from maxmind:
-
-```
-curl --output mm.tar.gz https://geolite.maxmind.com/download/geoip/database/GeoLite2-City.tar.gz
-tar xfzv mm.tar.gz
-```
-
-.. symlink (take correct directory, includes date..):
-
-```
-ln -s GeoLite2-City_20191105/GeoLite2-City.mmdb .
-```
-
-
 Finally, spin up the stack with:
 
 ```
+cd ./Docker
 sudo docker-compose up -d
 ```
 
+Note: Graylog will be built the first time you run docker-compose. The step below is only needed for updating the GeoLite DB.
+To update the geolite.maxmind.com GeoLite2-City database, simply run:
+```
+cd ./Docker
+sudo docker-compose up -d --no-deps --build graylog
+```
+
+
 This should expose you the following services externally:
 
 | Service | URL | Default Login | Purpose |
````
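Right after `docker-compose up -d` returns, some containers may still be starting. A rough way to see which published TCP ports already accept connections is a plain socket probe. This is a sketch, not a health check: the port list is copied from `Docker/docker-compose.yml` in this commit, `is_listening` and `probe` are hypothetical helpers, and UDP inputs such as Netflow on 2055 cannot be probed this way.

```python
import socket

# Published TCP ports from Docker/docker-compose.yml in this commit.
SERVICES = {
    "graylog": 9000,
    "elasticsearch": 9200,
    "kibana": 5601,
    "cerebro": 9001,
    "influxdb": 8086,
    "grafana": 3000,
}


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, ...
        return False


def probe(host: str = "localhost", services: dict = SERVICES) -> dict:
    """Map each service name to whether its port currently accepts connections."""
    return {name: is_listening(host, port) for name, port in services.items()}
```

Run on the Docker host, `for name, up in probe().items(): print(name, "up" if up else "down")` gives a quick overview; an open port only means the container is listening, not that Graylog or Elasticsearch has finished initializing.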

```diff
@@ -1,117 +0,0 @@
-version: '2'
-services:
-  # MongoDB: https://hub.docker.com/_/mongo/
-  mongodb:
-    image: mongo:3
-    volumes:
-      - mongo_data:/data/db
-  # Elasticsearch: https://www.elastic.co/guide/en/elasticsearch/reference/6.x/docker.html
-  elasticsearch:
-    image: docker.elastic.co/elasticsearch/elasticsearch-oss:6.8.4
-    mem_limit: 4g
-    restart: always
-    volumes:
-      - es_data:/usr/share/elasticsearch/data
-    environment:
-      - http.host=0.0.0.0
-      - transport.host=0.0.0.0
-      - network.host=0.0.0.0
-      - "ES_JAVA_OPTS=-Xms1g -Xmx1g"
-      - ES_HEAP_SIZE=2g
-    ulimits:
-      memlock:
-        soft: -1
-        hard: -1
-    ports:
-      - 9200:9200
-  # Graylog: https://hub.docker.com/r/graylog/graylog/
-  graylog:
-    image: graylog/graylog:3.1
-    volumes:
-      - graylog_journal:/usr/share/graylog/data/journal
-      - ./service-names-port-numbers.csv:/etc/graylog/server/service-names-port-numbers.csv
-      - ./GeoLite2-City.mmdb:/etc/graylog/server/GeoLite2-City.mmdb
-    environment:
-      # CHANGE ME (must be at least 16 characters)!
-      - GRAYLOG_PASSWORD_SECRET=somepasswordpepperzzz
-      # Password: admin
-      - GRAYLOG_ROOT_PASSWORD_SHA2=8c6976e5b5410415bde908bd4dee15dfb167a9c873fc4bb8a81f6f2ab448a918
-      - GRAYLOG_HTTP_EXTERNAL_URI=http://localhost:9000/
-      - GRAYLOG_TIMEZONE=Europe/Berlin
-    links:
-      - mongodb:mongo
-      - elasticsearch
-    depends_on:
-      - mongodb
-      - elasticsearch
-    ports:
-      # Netflow
-      - 2055:2055/udp
-      # Syslog Feed
-      - 5442:5442/udp
-      # Graylog web interface and REST API
-      - 9000:9000
-      # Syslog TCP
-      - 1514:1514
-      # Syslog UDP
-      - 1514:1514/udp
-      # GELF TCP
-      - 12201:12201
-      # GELF UDP
-      - 12201:12201/udp
-  # Kibana : https://www.elastic.co/guide/en/kibana/6.8/index.html
-  kibana:
-    image: docker.elastic.co/kibana/kibana-oss:6.8.4
-    # volumes:
-    # - ./kibana.yml:/usr/share/kibana/config/kibana.yml
-    environment:
-      - ELASTICSEARCH_URL=http://elasticsearch:9200
-    depends_on:
-      - elasticsearch
-    ports:
-      - 5601:5601
-  cerebro:
-    image: lmenezes/cerebro
-    ports:
-      - 9001:9000
-    links:
-      - elasticsearch
-    depends_on:
-      - elasticsearch
-
-  influxdb:
-    image: "influxdb:latest"
-    environment:
-      - INFLUXDB_DB="ndpi"
-    ports:
-      - "8086:8086"
-    volumes:
-      - influxdb:/var/lib/influxdb
-  grafana:
-    image: grafana/grafana:latest
-    environment:
-      - GF_INSTALL_PLUGINS=grafana-piechart-panel,grafana-worldmap-panel,savantly-heatmap-panel
-    ports:
-      - "3000:3000"
-    volumes:
-      - grafana:/var/lib/grafana
-      - ./provisioning/:/etc/grafana/provisioning
-    links:
-      - elasticsearch
-      - influxdb
-    depends_on:
-      - elasticsearch
-      - influxdb
-
-# Volumes for persisting data, see https://docs.docker.com/engine/admin/volumes/volumes/
-volumes:
-  mongo_data:
-    driver: local
-  es_data:
-    driver: local
-  graylog_journal:
-    driver: local
-  grafana:
-    driver: local
-  influxdb:
-    driver: local
```
