Mirror of https://github.com/lephisto/pfsense-analytics.git (synced 2026-01-26 20:24:11 +01:00)

Documentation adjustment



This project aims to give you better insight into what's going on on your pfSense firewall. It's based on some heavy lifting done by devopstales and opc40772. I wrapped some docker-compose glue around it to make it a little bit easier to get up and running. It should work hassle-free on a current Linux system that has docker and docker-compose installed. There are still a number of manual steps required.


The whole metrics approach is split into several subtopics:


| Metric type | Stored via | Stored in | Visualisation |
| ------------- |:---------------------: | --------------: | --------------: |
| pfSense IP Filter Log | Graylog | Elasticsearch | Grafana |
| NTOP DPI Data | NTOP timeseries export | InfluxDB | Grafana |


Optionally, Suricata/Snort logs can be pushed to Elasticsearch as well. Graylog has ready-made extractors for this, but it is currently not covered in this documentation.


# System requirements


Install docker, docker-compose and git.
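Before going further, you can check that the three tools are on your `PATH`. This is a minimal sketch; `check_tools` is a hypothetical helper name, and it only checks that the commands exist, not which versions are installed.

```shell
#!/bin/sh
# Hypothetical helper: succeeds only if every named command is found on PATH.
# Each missing command is reported on stderr.
check_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage on the analytics host:
# check_tools docker docker-compose git && echo "all prerequisites found"
```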


# Prepare Docker


Let's clone this repo onto the server where you intend to run the analytics front- and backend:


```
git clone https://github.com/lephisto/pfsense-graylog
cd pfsense-graylog
```


We have to adjust a system limit (the kernel setting `vm.max_map_count`) to allow Elasticsearch to run:


```
sysctl -w vm.max_map_count=262144
```


To make the setting permanent, edit /etc/sysctl.conf and add the line:


```
vm.max_map_count=262144
```
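Alternatively, on distributions whose `sysctl` reads drop-in files from `/etc/sysctl.d/`, the setting can live in its own file. The sketch below assumes root privileges; the file name `99-elasticsearch.conf` and the helper `check_max_map_count` are hypothetical choices, and 262144 is the minimum that Elasticsearch's bootstrap checks expect.

```shell
#!/bin/sh
# Minimum value Elasticsearch's bootstrap check expects.
REQUIRED_MAP_COUNT=262144

# Hypothetical helper: succeed if the kernel value (read from $1, default the
# live value in /proc) is at least REQUIRED_MAP_COUNT.
check_max_map_count() {
  file="${1:-/proc/sys/vm/max_map_count}"
  current=$(cat "$file") || return 2
  if [ "$current" -ge "$REQUIRED_MAP_COUNT" ]; then
    echo "ok: vm.max_map_count=$current"
  else
    echo "too low: vm.max_map_count=$current (need $REQUIRED_MAP_COUNT)" >&2
    return 1
  fi
}

# Persist the setting in a drop-in file, reload all sysctl config, verify (as root):
# echo "vm.max_map_count=$REQUIRED_MAP_COUNT" > /etc/sysctl.d/99-elasticsearch.conf
# sysctl --system
# check_max_map_count
```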


Next we edit the docker-compose.yml file and set some values:


The URL you want your Graylog to be available under:


- GRAYLOG_HTTP_EXTERNAL_URI (e.g. http://localhost:9000)


A salt for encrypting your Graylog passwords:


- GRAYLOG_PASSWORD_SECRET (Change that _now_)
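One way to produce these values is sketched below. It uses `openssl rand` to create a random password secret (Graylog's sample configuration recommends at least 64 characters) and `sha256sum` to hash an admin password. The hash is the format Graylog expects in `GRAYLOG_ROOT_PASSWORD_SHA2`; whether this repository's docker-compose.yml sets that variable is an assumption, so check the file before relying on it. The helper names are hypothetical.

```shell
#!/bin/sh
# Random value for GRAYLOG_PASSWORD_SECRET: 48 random bytes -> 96 hex characters.
make_password_secret() {
  openssl rand -hex 48
}

# SHA-256 of a password, as Graylog expects for GRAYLOG_ROOT_PASSWORD_SHA2.
# printf '%s' avoids hashing a trailing newline.
sha2_of() {
  printf '%s' "$1" | sha256sum | cut -d ' ' -f 1
}

# Usage:
# make_password_secret              # paste the result into GRAYLOG_PASSWORD_SECRET
# sha2_of 'my new admin password'   # paste the result into GRAYLOG_ROOT_PASSWORD_SHA2
```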


Now spin up the stack with:


```
docker-compose up
```


This should expose the following services externally:


| Service | URL | Default Login | Purpose |
| ------------- |:---------------------:| --------------:| --------------:|
| Graylog | http://localhost:9000 | admin/admin | Configure data ingestion and extractors for log information |
| Grafana | http://localhost:3000 | admin/admin | Draw nice graphs |
| Cerebro | http://localhost:9001 | none; provide the ES API: http://elasticsearch:9200 | ES admin tool, only required for setting up the index |


Depending on your hardware, after a few minutes you should be able to connect to your Graylog instance on http://localhost:9000. Let's see if we can log in with username "admin", password "admin".
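Because the startup time depends on your hardware, a small polling loop can wait until Graylog answers. This sketch assumes `curl` is installed and that Graylog's load-balancer status endpoint `/api/system/lbstatus` is reachable under the URI you configured; `wait_for_http`, the default attempt count and the interval are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: poll a URL until it answers with an HTTP success code.
# $1 = URL, $2 = max attempts (default 60), $3 = seconds between attempts (default 5)
wait_for_http() {
  url="$1"
  attempts="${2:-60}"
  interval="${3:-5}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -f: fail on HTTP errors, -s: silent, -o: discard the response body
    if curl -fs -o /dev/null --max-time 5 "$url"; then
      echo "up: $url"
      return 0
    fi
    # Only sleep if another attempt follows.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$interval"
    fi
    i=$((i + 1))
  done
  echo "gave up waiting for $url" >&2
  return 1
}

# Usage, assuming the default GRAYLOG_HTTP_EXTERNAL_URI:
# wait_for_http http://localhost:9000/api/system/lbstatus
```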

# Indices

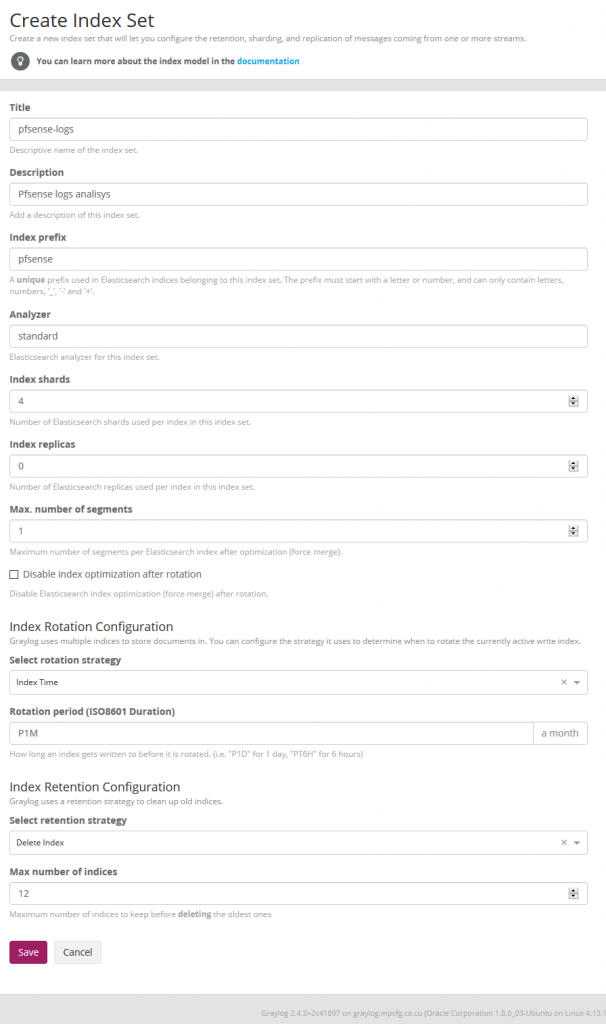

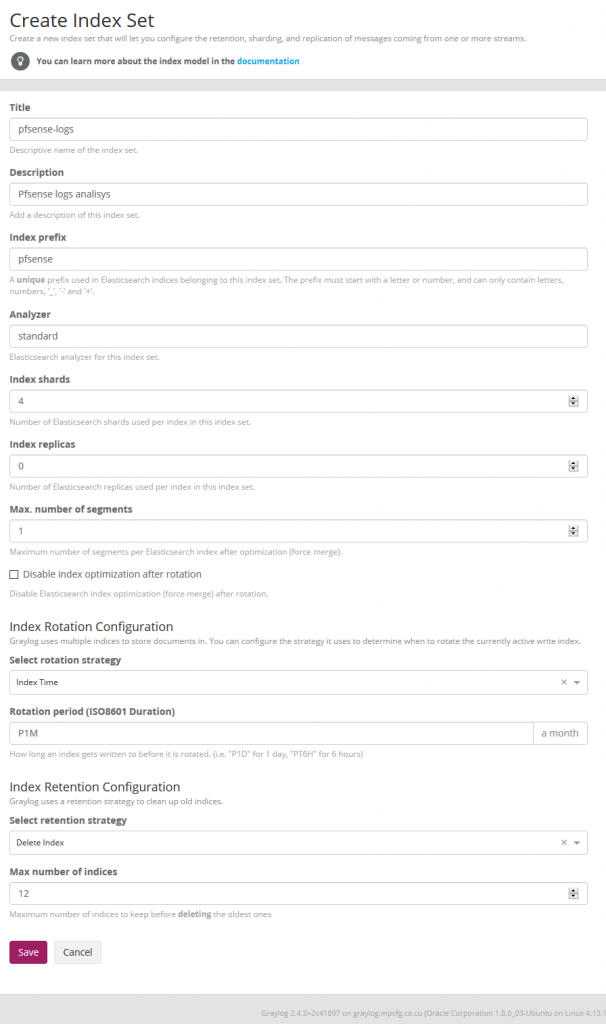

We now have to create the indices in Elasticsearch for the pfSense logs in System / Indices.

Index shard 4 and Index replicas 0, the rotation of the Index time index and the …

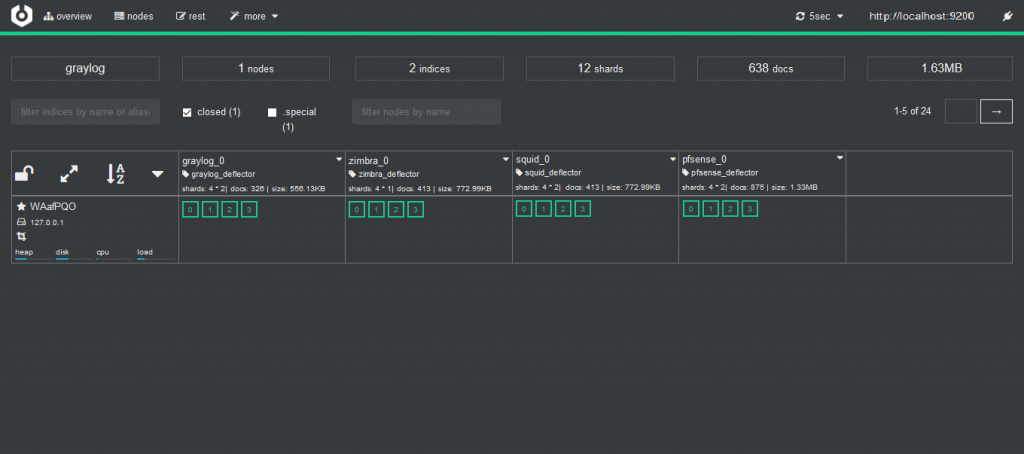

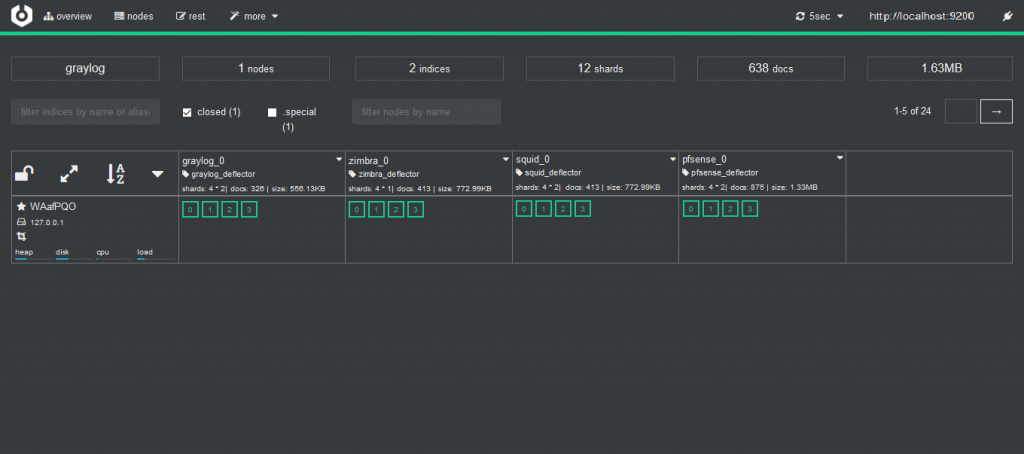

And with [cerebro](https://github.com/lmenezes/cerebro) we can check it. You can access Cerebro under http://localhost:9001 and enter "http://elasticsearch:9200" as the URL.
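If you prefer the command line over Cerebro, Elasticsearch's `_cat/indices` API shows the same information. This is a sketch under two assumptions: port 9200 is reachable from where you run it (inside the compose network the address is http://elasticsearch:9200; from the host only if docker-compose.yml publishes the port), and your index prefix is `pfsense`. `list_indices` is a hypothetical helper.

```shell
#!/bin/sh
# Elasticsearch base URL; override with ES_URL=... (assumption: reachable from here).
ES_URL="${ES_URL:-http://localhost:9200}"

# List indices matching a pattern, with a header row (v) and sorted by name (s=index).
# -f makes curl fail on HTTP errors so the exit status is meaningful.
list_indices() {
  curl -fsS --max-time 10 "${ES_URL%/}/_cat/indices/$1?v&s=index"
}

# Usage (quote the pattern so the shell does not expand the *):
# list_indices 'pfsense*'
```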


# Content Pack

This content pack includes an rsyslog-type input, extractors, lookup tables, data adapters for the lookup tables, and a cache for the lookup tables.

||||||

We have to import the file from the Content Pack folder: in System / Content Packs, select the option Import content packs and upload the file.




In the name we fill it with pfsense-custom and open the git file that has the te…

And then we press the create button.

_!!! IMPORTANT: Now we stop the Graylog service so that we can delete the index through Cerebro._

`#docker-compose stop graylog`

In Cerebro, we select the index, expand its options and choose delete index.

We start the Graylog service again, and it will create the index with this template.

`#docker-compose start graylog`
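The stop / delete / start sequence above can also be scripted, using Elasticsearch's delete-index API in place of the Cerebro UI. This is a sketch: `recreate_index`, the `DRY_RUN` switch and the example index name `pfsense_0` are hypothetical, and it assumes Elasticsearch is reachable at `ES_URL` from where you run it. Deleting an index removes its data, so double-check the name first.

```shell
#!/bin/sh
# Elasticsearch base URL (assumption: reachable from where this runs).
ES_URL="${ES_URL:-http://localhost:9200}"

# Execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Stop Graylog, delete the given index, start Graylog so it recreates the index.
# $1 = name of the index to delete (e.g. a hypothetical "pfsense_0")
recreate_index() {
  [ -n "$1" ] || { echo "usage: recreate_index INDEX" >&2; return 2; }
  run docker-compose stop graylog || return 1
  run curl -fsS -X DELETE "${ES_URL%/}/$1" || return 1
  run docker-compose start graylog
}
```

In bash, `DRY_RUN=1 recreate_index pfsense_0` only prints the three commands, which is a cheap way to review them before running the sequence for real.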


# Pipelines

We upload the downloaded file Upload .json file and associate it with the dataso…

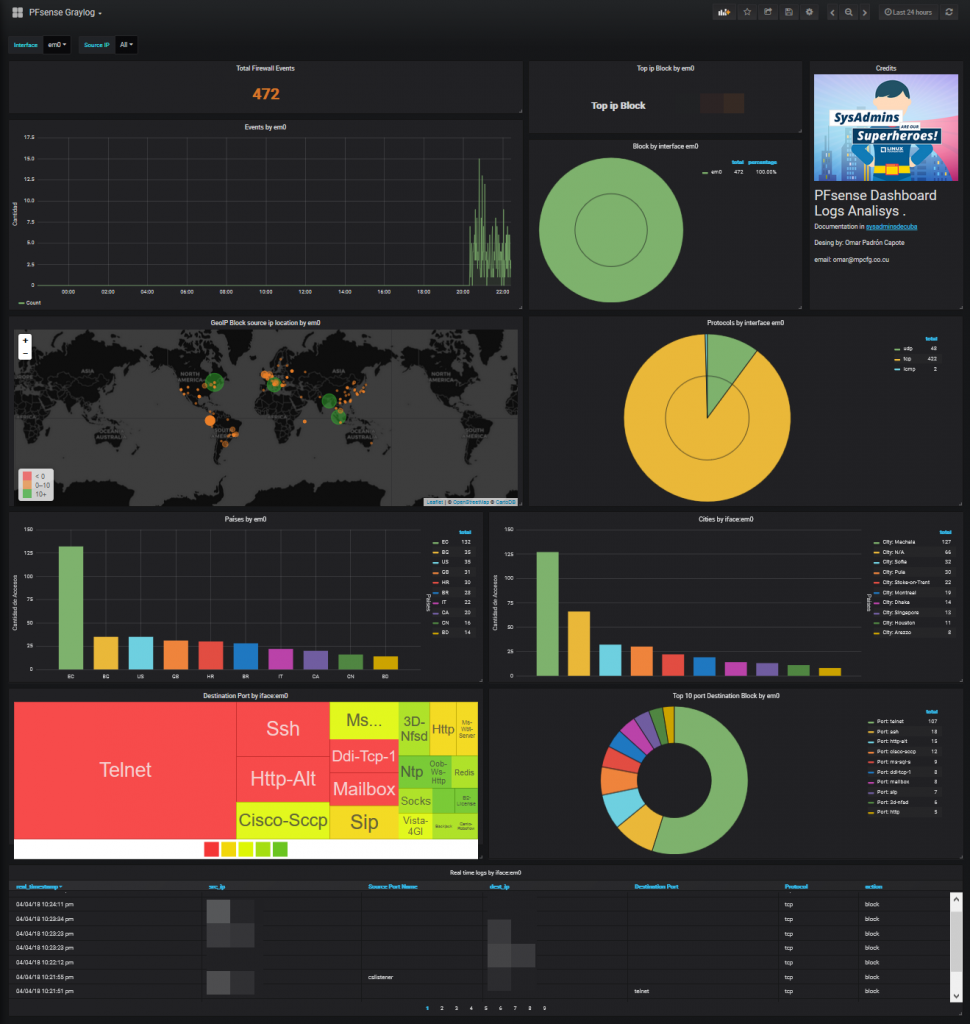

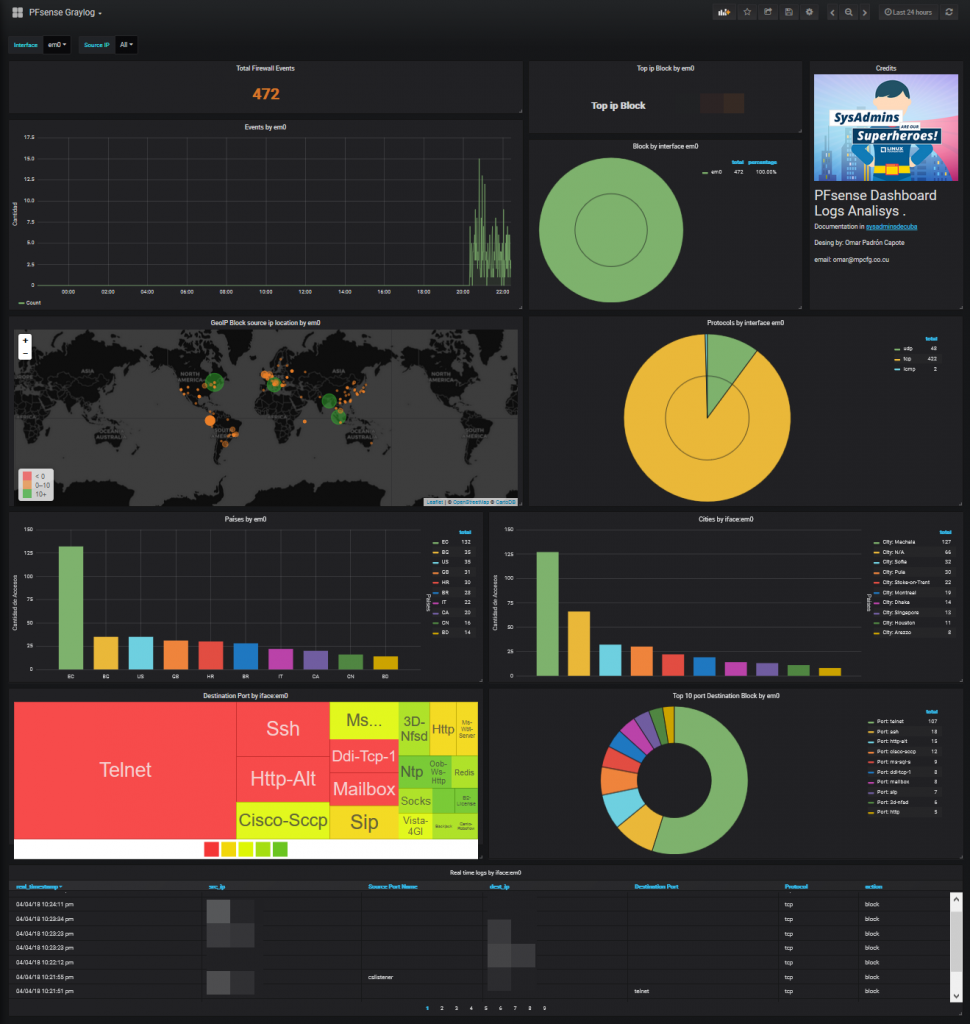

We can already see the dashboard in action.